Objective: To implement a Gaussian elimination algorithm (without pivoting) to perform a LU factorization of a square matrix in a distributed memory manner using message passing.

For this algorithm we use a simple 1-D block data distribution.

We consider a NxN matrix, A. This matrix

contains single precision elements (float).

The execution takes place on p processes, where p

divides N. Each process thus holds a

N × N/p block column of the matrix. In other words,

the process with rank 0 holds columns 0 to N/p − 1, the process with

rank 1 holds columns N/p to 2N/p − 1, etc.

This module consists of 1 activity:

You should turn in a single archive (or a github repo) with:

Implement a program called lu (lu.c), that implements the

Gaussian elimination (without pivoting) algorithm. You may have covered this algorithm in a course,

and it is of course well-document in various texts. Regardless, a sequential implementation,

sequential_lu.c, is provided. So you can use it as a starting point

in a "let's parallelize an existing sequential implementation" scenario. The overall algorithm for iteration

k is:

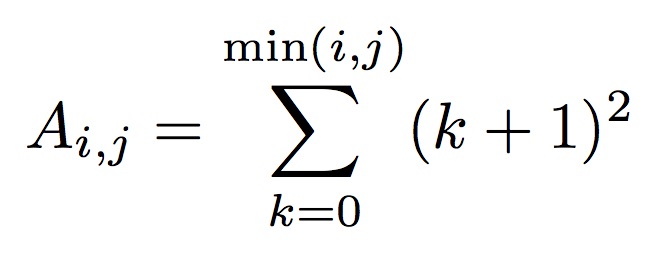

To make it easy to test your implementation, we compute the LU factorization of a particular matrix defined as follows:

The matrix, once the Gaussian Elimination is complete, will have all 1’s in its lower triangular portion,

and i2 values on its diagonal and in its upper triangular portion.

Run lu_sequential.c with a small value of N to see this structure. Your code should check

that these are the values computed by your implementation (each process can do its own check, and the number of

errors can be "MPI_Reduced" as the process of rank 0 to print and overall "success" or "failure" message).

You can use any XML platform file and accompanying hostfile to run your MPI program, since this module is solely about correctness and not performance. For instance, you can use cluster_crossbar_64.xml as a platform file and hostfile_64.txt as a hostfile.

For debugging purposes, remember that it's a good idea to insert calls to MPI_Barrier

so that debugging output from the program can be structured step-by-step.

Hints:

MPI_Bcast() function to perform the broadcastslocal2global() and global2local()

functions that do the translations between local and global indices.Congratulations for making it this far. You have learned to implement a moderately complex algorithm using a 1-D data distribution . This algorithm and its implementation are very typical of parallel numerical linear algebra implementations, but it's a bit simplistic. In fact, many optimization techniques are known to make the parallelization of LU factorization more efficient, in particular to hide the communication latencies. Hiding these latencies one of the themes of Topic #4.

In a README file write a brief "essay" explaining what you found surprising/challenging, and what solutions you used to solve your difficulties. Are you happy with your implementations? Do you see room for improvement?